Kevin Hou on Google DeepMind's Antigravity

My thoughts on Kevin Hou's talk about Antigravity, DeepMind's new agent-first development platform.

Google DeepMind has definitely gotten my attention with significant releases over the past few weeks, in particular Gemini 3 Pro, Gemini 3 Pro Image aka Nano Banana Pro, and most recently Antigravity. Based on this talk by Kevin Hou at the recent AI Engineer Code Summit, as well as the ThursdAI podcast’s chat with Hou recorded at the same event, I’ve made it a priority to get hands-on with Antigravity, and I find it to be a genuinely new and different take on the coding-with-AI experience. If, with Claude Code, Anthropic’s message was:

We don’t know what the experience should be, so we’re making Claude Code an extremely thin wrapper around our great coding models.

Google DeepMind is telling us:

We think we know what the next step function should look like, here’s Antigravity.

Below find my commentary and highlights from Kevin Hou’s talk.

What Is This Thing?

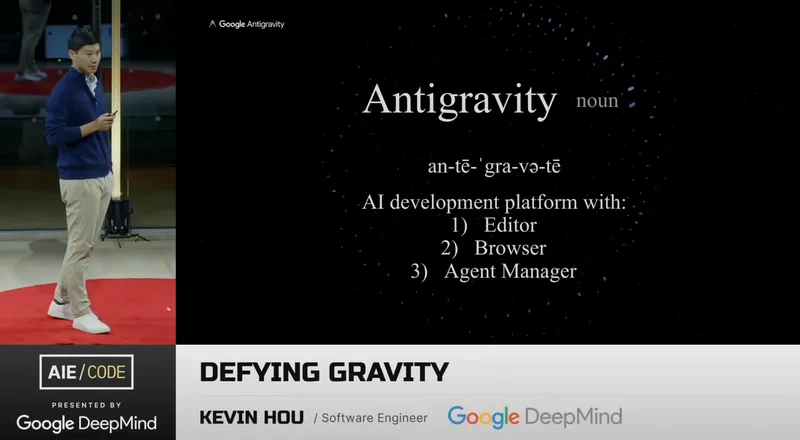

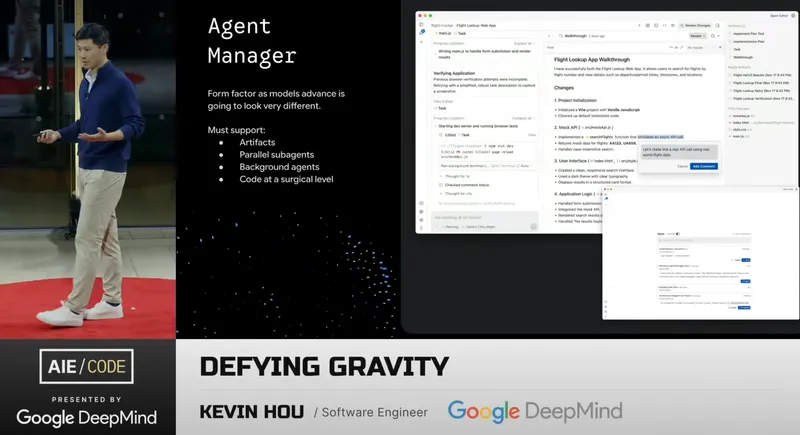

Antigravity is definitely not a thin wrapper around a great coding model. It is, rather, on the other end of the spectrum: a development platform whose feature set includes elements that go beyond existing alternatives, even “thick” ones. It is “Unapologetically Agent-First,” with an uber-overlord Agent Manager.

Hou introduces Antigravity as a platform with three main components: an editor, a browser, and an Agent Manager.

Two of these components, the browser and the Agent Manager, are effectively “new things” in an AI coding tool. Other platforms can “do browser stuff” and might have some agent/subagent action happening, but no major player delivers browser and agent manager elements that approach the level of power and integration that I’m seeing in Antigravity.

Diving Deeper on the Three Surfaces

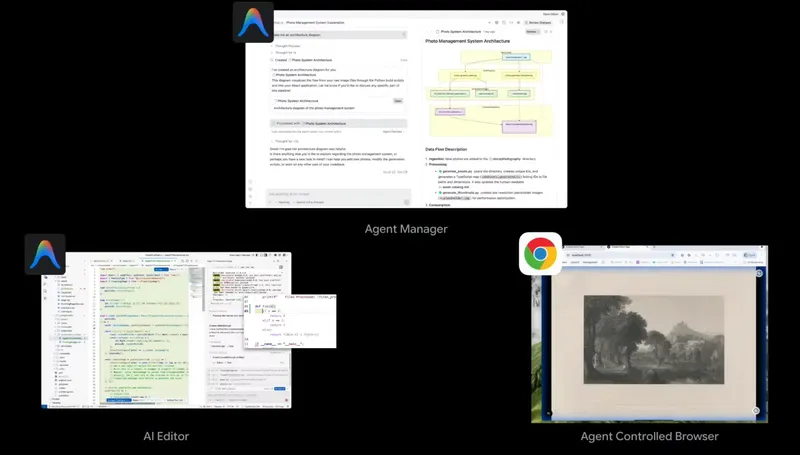

This more detailed view begins to expose a bit more about the three components:

It’s an AI developer platform with three surfaces. The first one is an editor. The second one is a browser. And the third one is the agent manager. So we’ll dive into what this means, and what each looks like. So a paradigm shift here is that agents are now living outside of your IDE. And they can interact across many different surfaces that your agent or that you as a software developer might spend time in.

The Agent Manager

Hou tells us:

Let’s start with the agent manager. So that’s the thing up top. This is your central hub. It’s an agent-first view, and it pulls you one level higher than just looking at your code. So instead of looking at diffs, you’ll be kind of a little bit further back. And at any given time, there is one agent manager window.

To me, what resonates is “one level higher.”

The Editor

Hou isn’t enthusiastic about the Editor because it’s a sexy feature, but because it’s a practical, utilitarian tool for an important job, one that has been optimized for that job, e.g. with sub-100ms switching between the Editor and the Agent Manager:

Now you have an AI editor. This is probably what you’ve grown to love and expect. It has all the bells and whistles that you would expect. Lightning-fast autocomplete. This is the part where you can make your memes about, yes, we forked VS Code. And it has an agent sidebar. And this is the sort of thing. It’s mirrored with the agent manager. And this is when you need to dive into your editor to accomplish maybe your 80% to 100% of your task. And at any point, we made it very, very easy, because we recognized not everything can be done purely with an agent, for you to Command-E or Control-E and hop instantly from the editor into the agent manager and vice versa. And this takes under 100 milliseconds, it’s zippy.

A key note: the editor has an agent sidebar, which is not the Antigravity Agent Manager—it’s more like the Cursor agent sidebar, just a way to work closely with a smart coding model while staring at your source files. The Agent Manager is the agent overlord, one level higher.

Also notable: the Antigravity Editor is a VS Code fork, but where Cursor looks like a VS Code fork, the Antigravity Editor does not: its UI feels more distinct and its feature set seems more obviously changed.

The Browser

And then finally, something that I love, an agent-controlled browser. This is really, really cool. And hopefully for the folks in the room that have tried Antigravity, you’ve noticed some of the magic that we’ve put in behind here. So we have an agent-controlled Chrome browser. And this gives the agent access to the richness of the web. And I mean that in two ways. The first one, context retrieval, right? It has the same authentication that you would in your normal Chrome. You can give it access to your Google Docs. You can give it access to, you know, your GitHub dashboards and things like that and interact with a browser like you would as an engineer. But also, what you’re seeing on the screen is that it lets you, it lets the agent take control of your browser, click and scroll and run JavaScript and do all the things that you would do to test your apps.

So here, I put together this like random artwork generator. All you do is refresh and you get a new picture of like a piece of Thomas Cole artwork. And now we added in a new feature, which is this little modal card. And the agent actually went out and said, okay, I made all the code. But instead of showing you a diff of what I did, let’s instead show you a recording of Chrome. So this is a recording of Chrome where the blue circle is the mouse, it’s moving around the screen, and in this way you get verifiable results. So that’s what we’re very excited about, our Chrome browser.

Antigravity still has some sharp edges, and in my experience the sharpest of those are in the Antigravity browser integration: it’s buggy at times, not as speedy as it could be, and not as smart as it should be. But it’s breaking new ground and I’m hopeful Google will actually prioritize Antigravity as a product and be serious about improving it. We’ll see.

Back to the Agent Manager

Hou circles back to the Agent Manager, the component that ties it all together:

The Agent Manager can serve as your control panel. The editor and the browser are tools for your agent. And we want you to spend time in the Agent Manager. And as models get better and better, I bet you you’re going to be spending more and more time inside of this Agent Manager.

And it has an inbox … It lets you manage many agents at once. So you can have things that require your attention. For example, running terminal commands … There are probably some commands that you want to make sure you hit okay on. So things like this will get surfaced inside of this inbox. One click, you can manage many different things happening at once. And it has a wonderful OS level notification. This solves that problem of multi-threading across many tasks at once.

The fact that the Agent Manager has an inbox underscores that this isn’t just an agent; it’s an orchestrator.

Why Did We Build It?

Hou dives into this question next:

Why did we build the product? How did we arrive at this sort of conclusion? You might say, oh, adding in a new window, it’s pretty random, right?

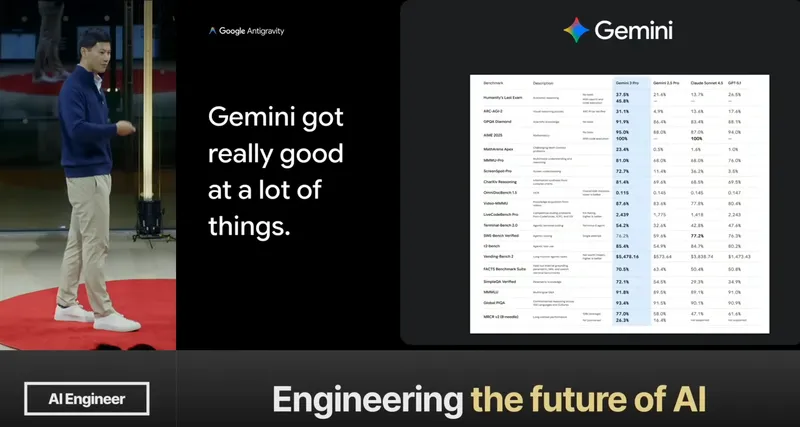

One part of his answer is that, after showing huge progress with Gemini 2.5 Pro (I recall watching Google’s announcement live at the AI Engineer World’s Fair in San Francisco in June 2025), Google now has a genuine contender for top coding model in Gemini 3 Pro. If it didn’t, Antigravity might not exist: if Gemini 3 Pro were a noncompetitive coding model, Google wouldn’t showcase that weakness by launching Antigravity:

The product is only ever as good as the models that power it … Every year there is this sort of new step function. The first, there was a year when it was autocomplete, right? Copilot. And this sort of thing was only enabled because models suddenly got good at doing the short form autocomplete. And then we had chat. We had chat with RLHF. Then we had agents. So you can see how every single one of these product paradigms is sort of motivated by some change that happens with model capabilities.

Hou also emphasizes the benefits of being right there in DeepMind next to the Gemini folks:

And it’s a blessing that our team … embedded inside of DeepMind. We had access to Gemini for a couple of months earlier, and we were able to work with the research team to basically figure out, you know, what are the strengths that we want to show off in our product? What are the things that we can exploit … Where are the gaps in the model, and how can we fix that, right? And so this is a very powerful part of why Antigravity came to be.

Four Categories of Improvement

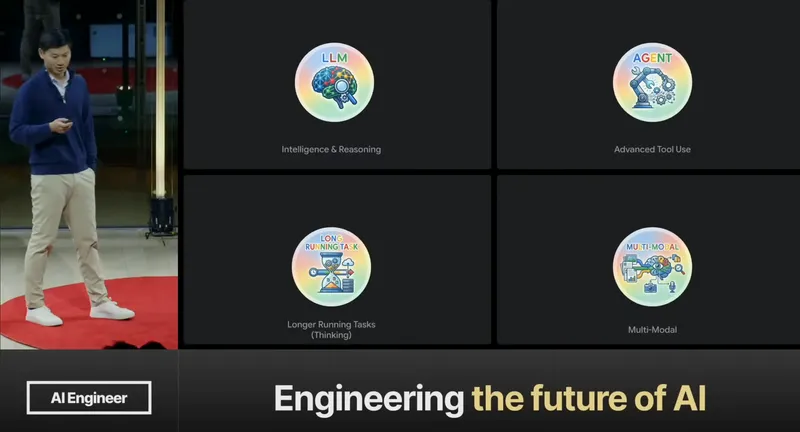

Using some Nano Banana Pro output, Hou highlights four categories of improvement in Gemini 3 Pro:

The first one is intelligence and reasoning. You all are probably familiar with this. You used, or used Gemini 3, and you probably thought it was a smarter model. This is good. It’s better at instruction following.

It’s better at using tools. There’s more nuance in the tool use. You can afford things like, you know, there’s a browser now. There’s a million things that you could do in a browser. It can literally even execute JavaScript. How do you get an agent to understand the nuance of all these tools?

It can do longer running tasks. These things now take a bit longer, right? And so you can afford to run these things in the background. It thinks for longer. Just time has gotten stretched out.

And then multimodal. I really love this property of what Google has been up to. The multimodal functionality of Gemini 3 is off the charts, and you start combining it with all these other models like Nano Banana Pro, and you really get something magical.

Tiptoeing Towards AGI

Tongue-in-cheek, Hou describes how Antigravity is stepping towards AGI and identifies three steps:

Step One: Raise the Ceiling

… of what the agent can do.

So step one is we want to raise the ceiling of capability. We want to aim higher, have higher ambition. And so a lot of the teams at DeepMind were working on all sorts of cutting-edge research.

Hou calls out Google’s extensive research into computer use, in particular browser use, as the first critical bar-raiser in Antigravity:

Browser use has been one of these huge unlocks. And this is twofold. I mentioned the sort of retrieval aspect of things. It’s reasonable for the model to now, given context, generate the code that hopefully functionally works. And then you’ve got the what to build. There’s this richness in context, the richness and institutional knowledge. And these are the sorts of things that having access to a browser, having access to your bug dashboards, having access to your experiments, all these sorts of things that now gives the agent this additional level of context.

This is now the other side of things. Browser as verification. So you might have seen this video. This is the agent. The blue border indicates that it’s being controlled by the agent. And so this is a flight tracker. You put in, you know, a flight ID and then it’ll give you sort of the start and end of that flight. And this is being done entirely by a Gemini computer use variant. So it can click, it can scroll, it can retrieve the DOM, it can do all the things. And then what’s really cool is you end up with not just the diff, you end up with a screen recording of what it did. So it’s changed the game and the model can take this and because it has the ability to understand images, it can take this and iterate from there. So that was the first category, browser use, just an insane, insane magical experience.

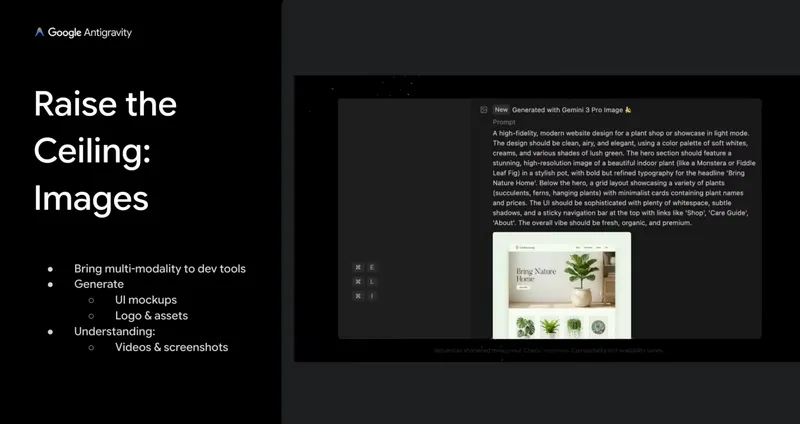

Hou’s second “raise the ceiling” focus is images, which these days means Gemini 3 Pro Image aka Nano Banana Pro.

I hadn’t connected the dots as to why Nano Banana Pro might be a big deal for development. Yes, you could easily generate some really spiffy architecture diagrams, but that’s a pretty small win. But I now understand that the value is much, much higher.

Now the second place that we wanted to spend time is on image generation. Gemini is spending a lot of time on multimodal. And this is really great for consumer use cases, right? Nano Banana 2 was mind boggling. But also for devs. Devs are inherently, this is a multimodal experience. You’re not just looking at text, you’re looking at the output of websites, you’re looking at architecture diagrams, there’s so much more to coding than just text. And so there’s image understanding, this is verifying screenshots, verifying recordings, all these sorts of things. And then the beautiful part about Google is that you have this synergistic nature. This product takes into account not just Gemini 3 Pro, but also takes into account the image side of things. And so here …

I had to watch this part of the talk about five times, first to figure out how you even get to Nano Banana Pro (NBP) from within Antigravity (answer: from the Agent Manager, just ask for something visual).

But secondly, to get my head around why Hou was claiming that Nano Banana Pro was a big win for developers. I’ve spent a lot of time using NBP, and been repeatedly amazed at what it was creating for me. But I hadn’t made the connection between NBP’s incredible image generating power and the UI design process that is a critical element of virtually all application development work. It’s the design, silly!

I want to give you a quick demo of mockups. I have a hunch and you all probably believe this too. Design is going to change, right? You’re going to spend maybe some time iterating with an agent to arrive at a mockup. But for something like, oh, let’s build this website, we can start in image space. And what’s really cool about image space is it lets you do really cool things like this.

In this case, I’ll show you my own example instead of Hou’s. My initial Antigravity project involves ejecting a project from the Bolt.new platform so we could regain control of the development process, which had proven wildly unstable in Bolt. The webapp in question is a simple secure data-sharing tool, and I worked with Antigravity through the process of unbolting from Bolt, plus several rounds of code reviews for security and robustness. I didn’t actually intend to redo the UI, but here I was, in the Agent Manager, with a working app that had a very plain UI. So I gave it a tiny prompt:

generate a new UI look and feel for me to consider for this app

and, in a single shot, got back a complete new UI mockup.

I make no endorsement, express or implied, of the model’s self-described “Dark Glassmorphism” theme, but still, this is a really impressive app theme, almost viable as a first design iteration, from a 14-word prompt that provided no stylistic guidance. As I discovered on re-watch #27, the Agent Manager intelligently orchestrates here, using Gemini 3 Pro to transform my 14 words into a 16-line, 153-word Nano Banana Pro prompt that includes guidance on fidelity, layout, style, and mood:

A high-fidelity UI design for a secure file upload web application. Modern, clean aesthetic. Dark mode with deep slate blues and vibrant accent greens (referencing the '[The Organization Name Here]' perhaps, but keeping it tech-focused).

Layout:

- Left Sidebar: Navigation links (My Files, Shared with Me, Settings, Admin Panel). User profile at the bottom.
- Main Content Area:
  - Top header: Search bar, notifications, and 'Upload New File' button.
  - Quick Stats Cards: 'Storage Used', 'Total Encrypted Files'.
  - Central Area: A large, elegant drag-and-drop zone with a subtle dashed border and cloud icon.
  - Recent Files List: A table-like view showing file icons (PDF, IMG), filenames, size, upload date, and a 'lock' icon indicating encryption.
- Style: Glassmorphism effects on the sidebar and cards. Smooth gradients. Crisp typography (Inter or Roboto).
- Mood: Trustworthy, secure, professional, yet easy to use.

And cool gets even cooler: you can select a region of the generated image and leave a comment on it, which is exactly what I did next. Hou:

We can add comments. And so you end up commenting and leaving a bunch of queued up responses. And it’s kind of like GitHub. You’ll just say, all right, now update the design. And then it’ll put it in here. The agent is smart enough to know when and how to apply those comments. And now we’re iterating with the agent in image space. So really, really cool new capability. And what was awesome is that we had Nano Banana Pro.

Those comments feed right back to the Agent Manager, which is then fully capable of building what Nano Banana Pro designs. The resulting application would almost certainly have a much better design, and be far more robust and maintainable, than anything a prompt-to-app tool like Bolt could produce.

Step Two: Age of Artifacts

… long live the agent manager.

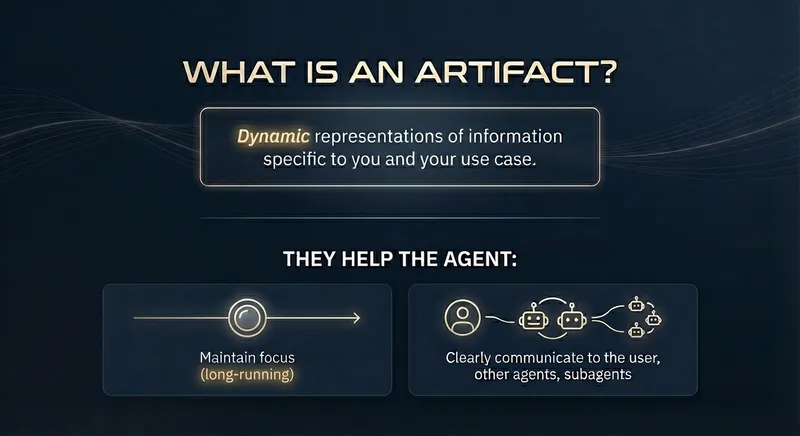

Hou calls out Antigravity’s Artifacts concept as the second critical bar-raiser. Grasping the full impact and implications of Artifacts also took me a few replays. I liked the idea of artifacts, especially their multimodal nature, but I didn’t get the “long live the agent manager” tagline at first.

Hou introduces Artifacts this way:

And so step two was, all right, we have this new capability. We’ve pushed the ceiling higher. Agents can do longer running tasks. They can do more complicated things. They can interact on other surfaces. And so this necessitates a new interaction pattern. And we’re calling this Artifacts. This is a new way to work with an agent. And this is one of my favorite parts about the product. And at its core is this agent manager. So let’s start by defining an Artifact.

I love Hou’s Artifact definition; I created my own image for it using Nano Banana Pro:

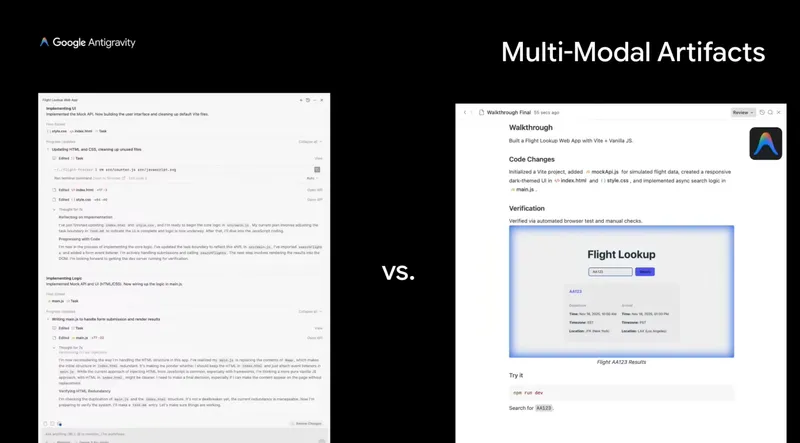

This is what you see on the right side of this agent manager. We’ve dedicated sort of half the screen and your sidebar to this concept of Artifacts.

We’ve all tried to follow along chain of thought [left side]. And I would say this, you know, we did some fanciness here inside of the agent manager to make sure conversations are broken up into like chunks. So in theory, you could follow along a little bit better in the conversation view, but ultimately you’re looking at a lot of strings, a lot of tokens. This is very hard to follow. And then there’s like 10 of these, right? So you just scroll and scroll and you’re like, what the heck did this agent do? And this has been traditionally the way that people review and supervise agents …

But isn’t it much easier to understand what is going on inside of this [right side] visual representation? And that is what an Artifact is.

The whole reason why I’m not just standing up here and giving you this long stream of consciousness is because I have a PowerPoint. The PowerPoint is my Artifact. Gemini 3 is really strong with this sort of visual representation, it’s really strong with multimodal. So instead of showing this [left side], which of course we always show you, but we want to focus on this [right side]. And I think this is the game-changing part about Antigravity. And the theme is this dynamicism.

As I started to digest this, the connection between the powerful Artifacts abstraction, and an Agent Manager capable of long-running orchestration of my projects, began to really sink in.

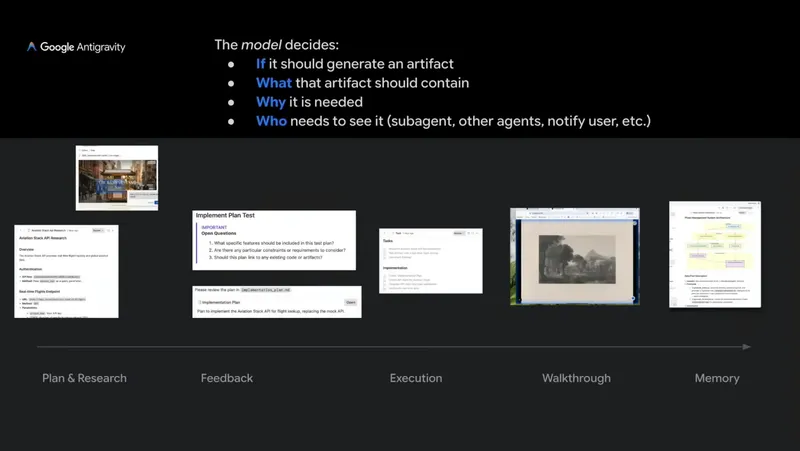

Hou emphasizes that the model chooses if, what, why, and for whom artifacts are created.

The model can decide if it wants to generate an artifact. And let’s remember there are some tasks. We’re changing a title. We’re changing something small. It doesn’t really need to produce an artifact for this. So it will decide if it needs an artifact. And then second, what type of artifact? And this is where it’s really cool. There are many potential infinite ways that it can represent information.

Common ones are markdown in the concept of a plan and a walkthrough … When you start a task, it will do some research. It will put together a plan. This is very similar to like a PRD. It will even list out open questions. So you can see in this feedback section, it’ll surface, hey, you should probably answer these three questions before I get going.

And what’s really awesome—and we’re betting on the models here—is that the model will decide whether or not it can auto-continue. If it has no questions, why should it wait? It should just go off. But more often than not, there are probably areas where you may be under-specified … So it’ll surface open questions for you. And so you’ll start with that implementation plan.

It might produce other artifacts. We’ve got a task list here. It might put together some architecture diagrams. And then you’ll get a walkthrough at the end. And this walkthrough, you kind of saw a glimpse of this before. But it is, hey, how do I prove to you, agent to human, that I did the correct thing and I did it well. And then this is the part that you’ll end with. It’s kind of like a PR description.

And then there’s a whole host of other types, right? Images, screen recordings, these mermaid diagrams. And really what’s quite cool is that because it’s dynamic, the agent will decide this over time. So suddenly there’s maybe a new type of artifact that we maybe would have missed. And then it’ll figure that out. It’ll just become part of the experience. It’s very scalable.

This artifact primitive is something that’s very, very powerful that I’m pretty excited about.

Hou circles back to Artifact comments, explaining how they are available across all types of Artifacts, all modalities:

Another very cool property of this Artifact system: we want to be able to provide feedback along this cycle. So from task start to task end, we want to be able to provide feedback and inform the agent on what to change. The Artifact system lets you iterate with the model more fluidly during this process of execution. Not to sound like a complete Google shill, but I love Google Docs, right? Google Docs is a great pattern—the comments are great. This is how you might interact with a colleague. You’re collaborating on a document, then all of a sudden you want to leave a text-based comment. So we took inspiration from that.

We took inspiration from GitHub. You leave comments. You highlight text. You say, hey, maybe this part needs to get ironed out a bit more. Maybe there’s a part that you missed or actually don’t use Tailwind, use Vanilla CSS. So these are the sorts of comments that you would leave. You’d batch them up and then you’d go off and send. And then in image space, this is very cool. We now have this Figma style, highlight to select. And now you’re leaving comments in a completely different modality, right?

We’ve done this and instrumented the agent to naturally take your comments into consideration without interrupting that task execution loop. So at any point during your conversation, you could just say, oh, actually, I really don’t like the way that that turned out. Let me just highlight that, tell you, send it off, and then I’ll just get notified when you’re done taking into consideration those comments.

Finally, Hou steps back up to the uber Agent Manager, making the point that the “form factor as models advance is going to look very different”:

It’s a whole new way of working. This is at the center of what we’re trying to build with Antigravity. It’s pulling you out into this higher level view. And the Agent Manager is built to optimize the UI of artifacts. We have a beautiful Artifact review system. We’re very proud of this. And it can also handle … parallelism and orchestration. So whether this be many different projects, whether this be the same project and you just want to execute a design mock-up iteration, at the same time you’re doing research on an API, at the same time you’re iterating and actually building out your app, you can do all these things in parallel. And the artifacts are the way that you provide that feedback. The notifications are the way that you know that something requires your attention. It’s a completely different pattern. And what’s really nice is that you can take a step back.

Of course, you can always go into the editor. I’m not going to lie to you. There are tasks that you maybe don’t trust the agent yet. You don’t trust the models yet. And so you can Command-E and it will open inside the editor within a split second with the exact files, the exact artifacts, and that exact conversation open ready for you to auto-complete away to continue chatting synchronously to get you from 80% to 100%. So we always want to give devs that escape hatch.

But in the future world—we’re building for the future—you’ll spend a lot of time in the Agent Manager working with parallel subagents. It’s a very exciting concept.

Step Three: Be Your Biggest User

… research / product flywheel

Okay. So now that you’ve seen, we’ve got new capabilities, multitude of new capabilities. We’ve got a new form factor. Now the question is like, what is going on under the hood at DeepMind? And the secret here is a lesson that I guess we’ve just learned over the past. I don’t know. We’ve spent like, I personally spent like three years in CodeGen. It’s just to be your biggest user, right?

And that creates this research and product flywheel. And so I will tell you, Antigravity will be the most advanced product on the market because we are building it for ourselves. We are our own users. And so in the day to day, we were able to give Google engineers, DeepMind researchers, we were able to give them an early access and now an official access to Antigravity internally. And so now all of a sudden, the actual experience of the models that people are improving, the actual experience of using the Agent Manager and touching Artifacts is letting them see at a very, very real level what are the gaps in the model. And whether it be computer use, whether it be image generation, whether it be instruction following, right?

Every single one of these teams, and there are many teams at Google, has some hand inside of this very, very full-stack product. And so you might notice as an infrastructure engineer, you might say, oh, this is a bit slow. Well, build it for yourself, make it faster. Image gen, all of a sudden, computer use isn’t going well. It can’t click this button. It’s really bad at scrolling, really bad at finding text on the page. Well, go off and make that better, right? So it gives you this level of insight that evals just simply can’t give you. And I think that’s what’s really cool about being at DeepMind. You are able to integrate product and research in a way that creates this flywheel and pushes that frontier.

Hou drifts here, as a relatively new Google hire, into making claims and guarantees that run counter to Google’s track record. What Hou says Google will do is perhaps what it should do, but it has often failed to do so.

I hope Google can steer a committed and steady course here, as I’m very excited by Antigravity and its underlying Gemini 3 powerplant.

Time will tell.